Introduction

§ 1 In 1998 two medieval musicologists at Oxford and Royal

Holloway, Margaret Bent and Andrew Wathey, started work on a

facsimile volume in the long-running series, Early

English Church Music (EECM). They took the

then-innovative decision to acquire digital rather than

analog images for this project: all prior facsimiles had

relied on black & white glossies or transparencies.

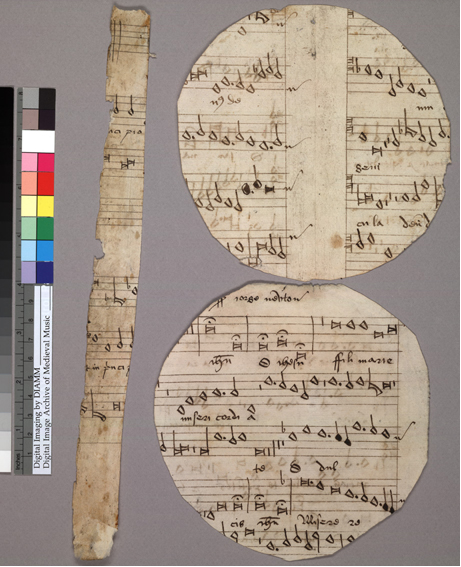

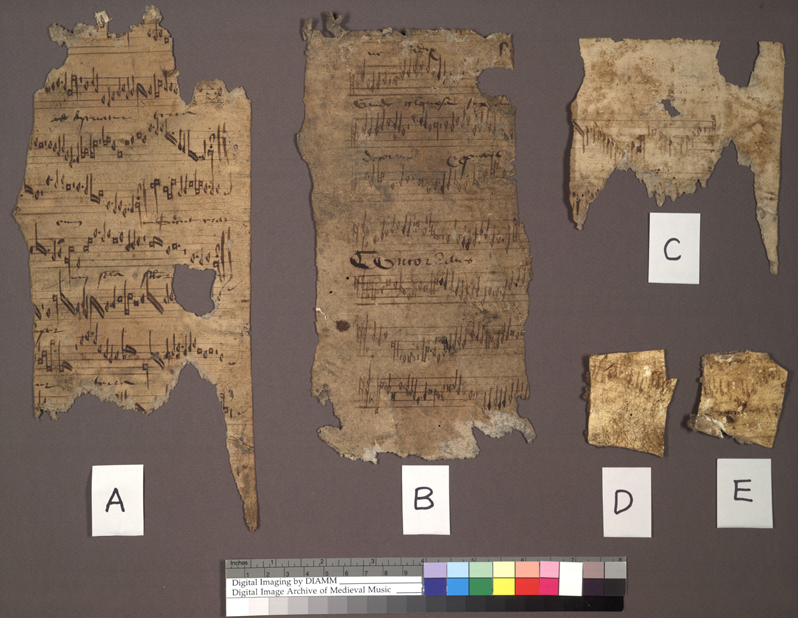

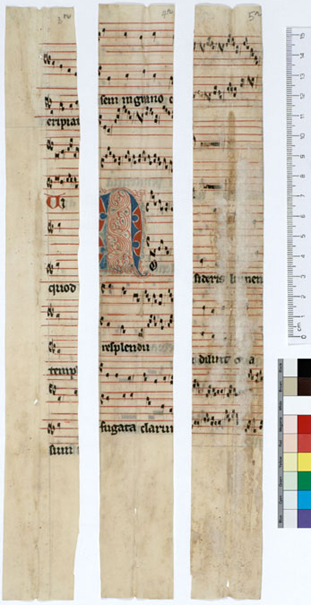

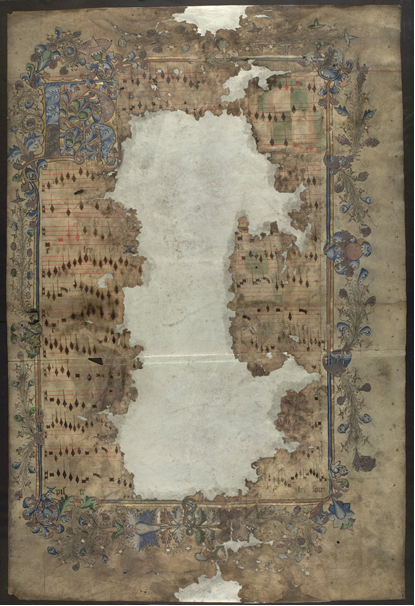

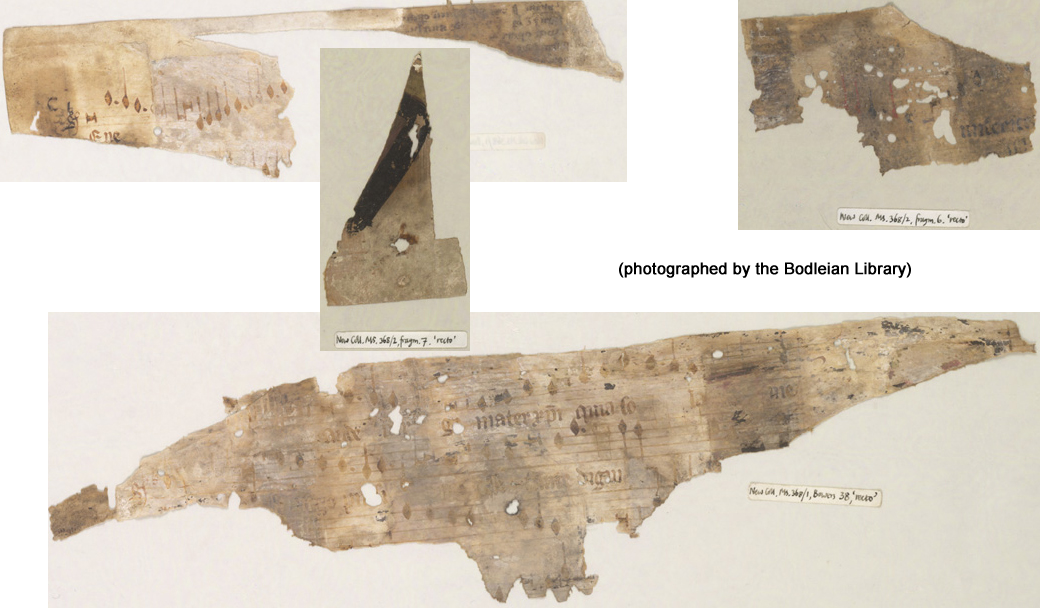

§ 2 There were a number of reasons for this decision: firstly

the collection was of fragments, often in poor condition,

ranging from pieces the size of a large postage stamp to

several complete bifolia. These were in danger from natural

decay, damage hastened by medieval 'vandalism', and poor

husbandry. Some which should have been included had been

stolen or mislaid. Study of the repertory as a whole was

nearly impossible due to geographical spread, and hampered

further by the often appalling condition of the sources. The

damage evident was almost all due to the re-use of the

parchment, but in some cases resulted from early

20th-century attempts at restoration. Some examples of the

types of challenges to transcription are seen below: the

first is the lining of a hat box, the second was used to

mend the inside of an organ case, the strips had been used

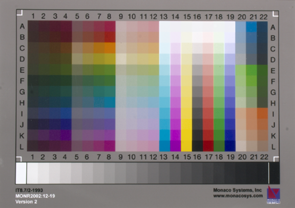

as quire-guards; the single surviving leaf of what was

clearly an extremely opulent choirbook was eaten away by

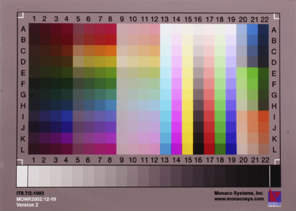

rats and mould, and the final group were scraped off a

ceiling, where they had been used as wallpaper backing.

Figure 1: GB-Ipswich, Record Office S1/2/403, recto

Figure 2: F-Rodez, Archives départementales de l'Aveyron, MS

J2001, fragments

Figure 3: GB-Cambridge, Jesus College MS QB 1(3) f.

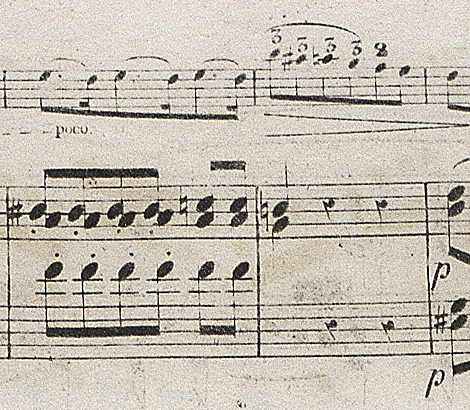

5r

Figure 4: GB-London, National Archives E163/22/1/3

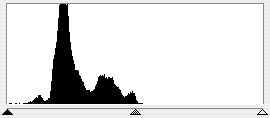

recto

Figure 5: GB-Oxford, New College MS 368, various folios

(photographed by the Bodleian Library)

§ 3 Bent and Wathey consulted with Marilyn Deegan (principal

technical advisor to DIAMM) and found that new digital

imaging and image-processing technologies might enable them

to manipulate the images they acquired, if they were of high

enough quality, to improve the readability of the texts, and

thus produce a volume of photographs that was more useful

than a conventional facsimile.

§ 4 Secondly, they believed that the cost of creating colour

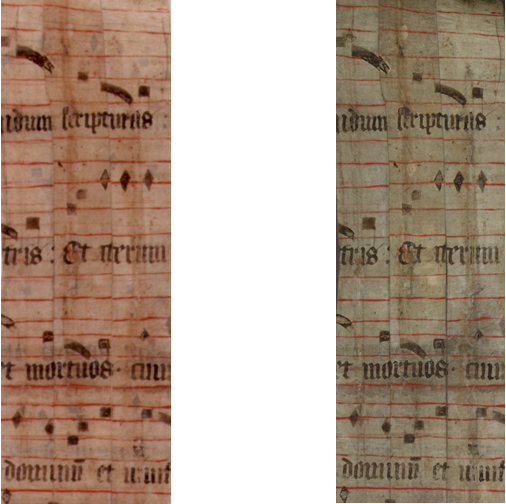

digital images would be the same as producing digital black

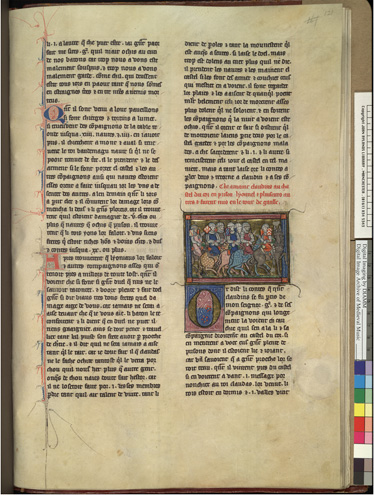

& white, and since much of this repertory utilises

different-coloured inks to represent different rhythmic

interpretations, colour was essential.

§ 5 A third reason for creating digital images came from their

experience with their own collections of slides,

transparencies and microfilms, gathered painstakingly over

nearly 40 years. These media were deteriorating; even

relatively new slides had discoloured, and every time a

microfilm or fiche was used it became slightly more

scratched. Their everyday use of computers suggested that

accessing images as digital objects would be far easier and

more productive than conventional means of studying

surrogates.

§ 6 Given the fragile state of the repertory to be studied, and

the imminent loss of much of the information it contained,

they felt that a permanent archive of some sort

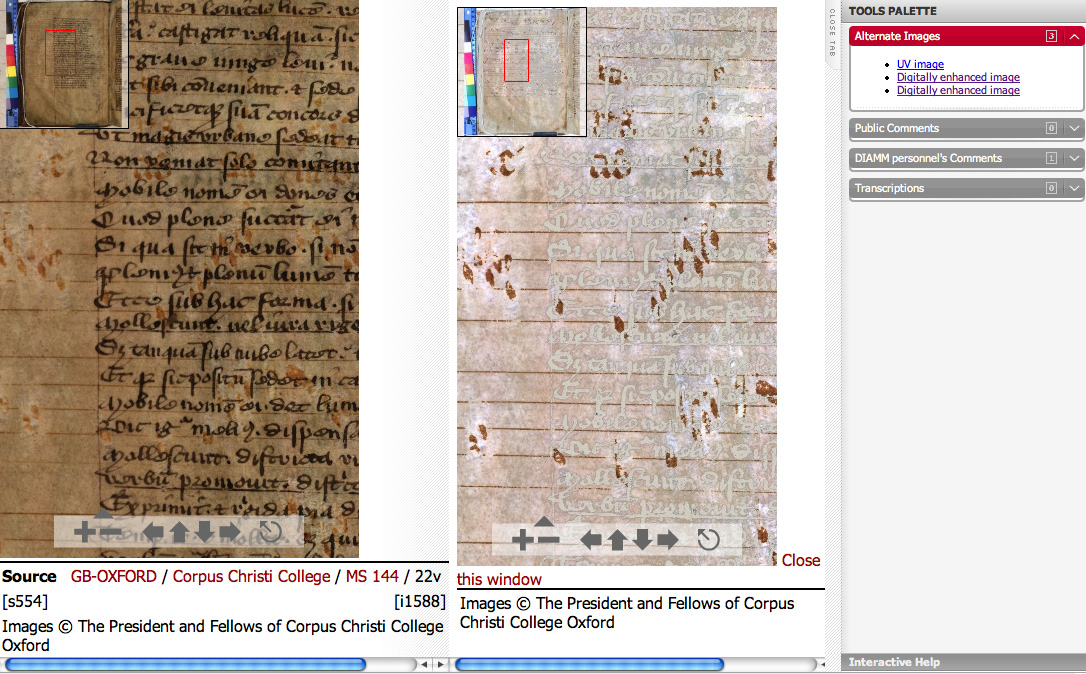

must be made to save a record of these

fragments before they were permanently lost due to further

deterioration. The project therefore immediately evolved

beyond the need to collect images for a publication, and

took its name from the archiving part of its work.

§ 7 In 1998 Bent and Wathey saw no particular need for a

website (research websites at this time were virtually

unknown). Once the project began though, it was decided that

a digital project should have an online presence, even if it

was only a brief front page. It is a measure of the

incredible speed with which the internet has become a

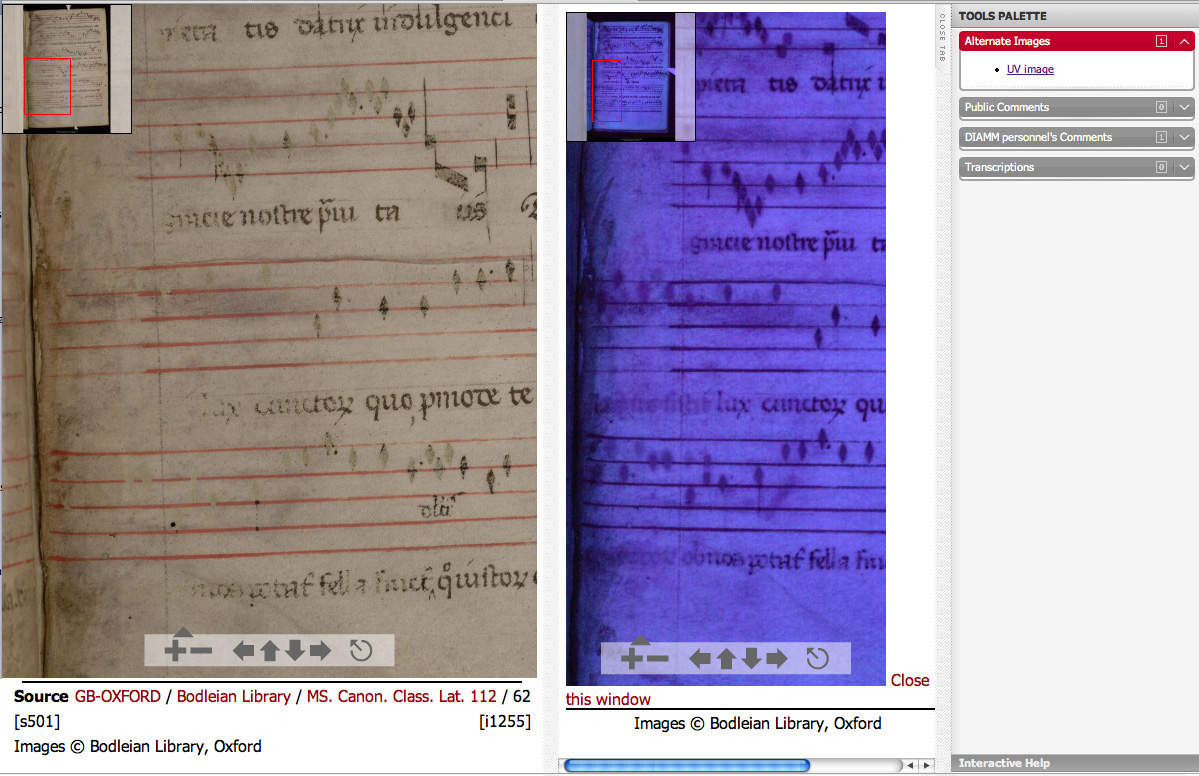

serious academic resource, and the importance it holds now,

that as recently as 1998 a website was considered frivolous,

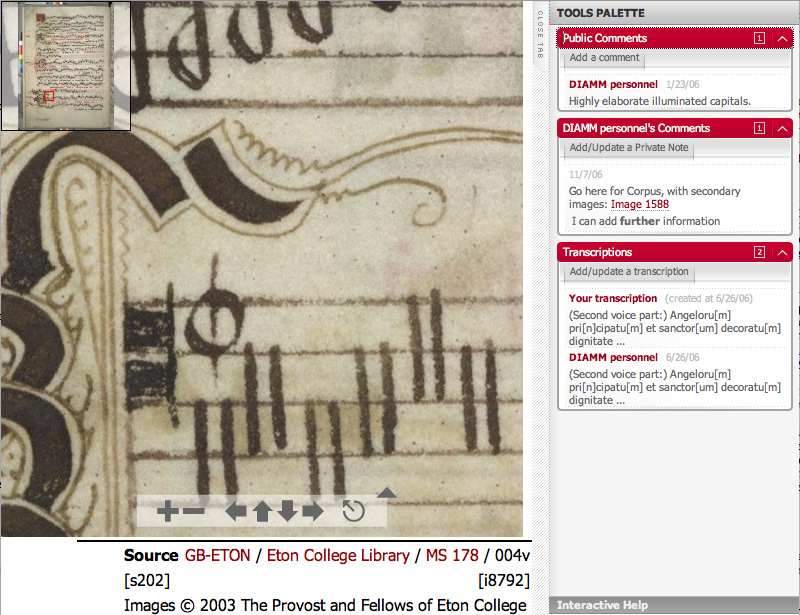

and 'archiving', as described at a British Academy

conference in late 1998, was discussed by many academic

projects in terms of how to store your word files, whereas

now a website is considered an essential part of any project

in reaching the wider research community.

§ 8 Failure to participate in the digital world is now frowned

upon: those without access to the internet are actively

discriminated against ("10% discount online" is a familiar

advertising catch-phrase); academics who fail to participate

in the digital medium, or who refuse to use digital

resources are in danger of being labelled dinosaurs, and

missing out on vital information or discourse, because now

that is the only medium in which that

information exists. DIAMM is such a resource: these sources

are geographically very widely scattered, and could never be

consulted side by side in the analog world. Some are

inaccessible through politics or geography, while others are

now considered too valuable or delicate to be consulted in

person, such as the Old Hall MS (British Library Add. 57950),

one of the most important surviving sources of English

Medieval polyphony, and Chantilly, Musée Condé MS 564, a

crucial source of French Medieval polyphony, and one of the

jewels in the crown of Medieval France.

§ 9 The gradual impact of technology on the humanities began as

the driving force behind many academic digital initiatives

such as DIAMM; now technology is having to move to meet the

demands of an increasingly technically knowledgeable

academic community. When DIAMM first started digital

imaging, a large scanning back camera existed, but computing

available to projects such as this was not sufficient to

manipulate images of the size that it produced (280 MB).

Therefore, instead of buying the top-of-the-range camera,

the project started with one a step below, which produced 80

MB images, making it practical to consider software

manipulation with the resulting images. Within a few years

computing had caught up with the scanning backs, and the

equipment was upgraded to the larger camera, with a

corresponding increase in both the quality of the images,

and the complexity of the digital restoration that could be

undertaken.

§ 10 Storage has always been a critical component of DIAMM, and

the project has been extremely fortunate to have the support

of, and access to, the hierarchical file-server managed by

Oxford University Computing Services (OUCS). At present our

content occupies about 10 Terabytes of space. Using

uncompressed TIF format for the images increases storage

needs, but is preferable to a lossless compressed format

that may not be readable by contemporary software in a few

years' time, or may require a migration process that alters

the data in the image. Since digital archiving is relatively

new, we still do not know whether our file formats will have

the longevity we hope for, but at present we follow best

practices and keep a weather eye on technology and software

developments.

§ 11 Grants from the Arts and Humanities Research Board (AHRB,

formerly the Humanities Research Board) in the UK have

enabled DIAMM to exploit its now-extensive expertise in the

field of high-resolution digital imaging and extend its

remit to include a broader range of fragments than the

original group, and also embrace the very considerable

corpus of complete and comparatively undamaged manuscripts

surviving throughout Europe, not just those on our doorstep

in the UK. Since the imaging equipment is completely mobile,

and our protocols are well established, we are able to

produce images of completely consistent quality in archives

as widely separated as London and Hikone in Japan, and we

have worked in archives throughout Europe.

§ 12 We have digitized manuscripts for a number of other

projects and individuals, musical and non-musical, medieval

and modern, ranging from 2nd-century Chinese scrolls to

Medieval mystery plays, Anglo-Saxon Charters to Jane

Austen's holograph (a representative list is available on

our website: http://www.diamm.ac.uk/content/access/partners/projects.html). We have also acted as consultants to a number of projects such as

Chopin's First Editions Online (CFEO – managed by the Centre

for Computing in the Humanities (CCH)) and have provided

restoration advice widely. The possibilities created for

research by imaging at this quality are discussed by Meg

Twycross in Virtual Restoration and Manuscript Archaeology: A case

study.

Image Quality

§ 13 Image quality is still a major issue in digital imaging,

since so many suppliers are still producing digital images

of appalling quality, believing that this is all that

digital cameras are capable of. Unfortunately these are not

amateur organisations: they are often the photographic

departments of major international research institutions.

§ 14 Several institutions have published information about

imaging standards:

§ 15 In spite of the availability of this sort of standard and

the reasons behind its creation, there is an extraordinary

level of ignorance regarding quality, and a surprising

inability to evaluate digital images and see problems which

should be obvious. An alarming number of institutions are

digitizing at spectacular speed, but still have not

attempted to calibrate any of their equipment, so none of

their images have accurate colour.

§ 16 One of the projects to which DIAMM acts as an imaging

advisor placed a series of orders for high-quality digital

imaging with a group of international libraries. Only the

British Library managed to meet the imaging specifications,

which were basic and simple, and designed to create a corpus

of consistent images to facilitate online comparative use:

- Images should be taken at 400 dpi resolution at actual size;

- A colour scale and size scale must be included in each image;

- The image must be saved in uncompressed TIF format, with no JPG or other compression format used at any point during the capture process;

- The colour profile of the capture device should be embedded in the image;

- No unsharp mask, level adjust or any other process should be applied to the image either during capture or after;

- The picture must be in focus at pixel-for-pixel view.
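As a rough illustration, the checklist above could be turned into an automated acceptance check. The sketch below is hypothetical: it assumes each delivered image has already been described by a small metadata record, and the field names (`dpi`, `format`, `processing` and so on) are invented for this example. The focus flag would still have to come from a person inspecting the image at pixel-for-pixel view, and a check of this kind cannot detect compression or resampling that happened earlier in the capture chain.

```python
def check_image_spec(meta):
    """Return a list of ways a delivered image fails the baseline spec.

    `meta` is a hypothetical metadata record; its keys are assumptions
    made for this sketch, not fields of any real imaging system.
    """
    problems = []
    if meta.get("dpi") != 400:
        problems.append("not captured at 400 dpi at actual size")
    if not (meta.get("colour_scale") and meta.get("size_scale")):
        problems.append("colour scale or size scale missing")
    if meta.get("format") != "TIF" or meta.get("compression") is not None:
        problems.append("not an uncompressed TIF")
    if not meta.get("profile_embedded"):
        problems.append("capture-device colour profile not embedded")
    if meta.get("processing"):
        problems.append("post-processing applied: " + ", ".join(meta["processing"]))
    if not meta.get("in_focus"):
        problems.append("not in focus at pixel-for-pixel view")
    return problems


# Two invented deliveries: one compliant, one with three faults.
good = {"dpi": 400, "colour_scale": True, "size_scale": True, "format": "TIF",
        "compression": None, "profile_embedded": True, "processing": [],
        "in_focus": True}
bad = dict(good, dpi=200, format="JPG", processing=["unsharp mask"])

print(check_image_spec(good))
print(check_image_spec(bad))
```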

§ 17 There are other specifications which we would add in an

ideal world, but we have found that just getting the

supplier to meet this baseline standard for an acceptable

digital image is extremely difficult, so it is a waste of

time to ask for more.

§ 18 Despite clear quality specifications, the images received

in response to the orders were rarely acceptable in quality:

one library captured everything at 200 dpi, then used

image-processing software to 'increase the resolution' to

400 dpi: the result was a blurred image. In order to

increase resolution in this way, the software has to

interpolate new pixels between the ones that already exist.

This is done by inserting a pixel with a colour value

halfway between the colours of the pixels on either side of

the new one. If the original image has a white pixel

adjacent to a black one, the interpolated pixel will be 50%

grey, softening the previously sharp demarcation.
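The softening effect can be seen in a minimal sketch of the midpoint interpolation just described, applied to a single row of grey values (0 is black, 255 is white). This is a simplification for illustration: real image-processing software offers several interpolation methods and works in two dimensions.

```python
def upsample_row(row):
    """Roughly double a row of grey values by inserting, between each
    pair of neighbours, a new pixel halfway between their values."""
    out = []
    for left, right in zip(row, row[1:]):
        out.append(left)
        out.append((left + right) // 2)  # the interpolated pixel
    out.append(row[-1])
    return out


# A sharp edge: two white pixels next to two black pixels.
sharp_edge = [255, 255, 0, 0]
print(upsample_row(sharp_edge))
```

The white-to-black boundary that was one pixel wide in the original now contains an intermediate grey value, which is the blurring seen in the library's images.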

§ 19 Another library saved the images in compressed JPG format,

so that all the delicate gradations of colour were lost: JPG

is a 'lossy' compression format, so it destroys data by

storing colours that are nearly the same as if

they were one colour. The software and degree of compression

define how different the colours have to be before they are

treated as separate entities. The alarming aspect of JPG

compression is that the effects are usually not visible

until the image is closed and re-opened, by which time the

missing data has been irrevocably discarded.
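The idea of nearly identical tones being stored as one can be illustrated with a toy example. This is not the JPG algorithm itself, which works on blocks of pixels in a transformed representation; it is only a sketch of the effect described above, with the `step` parameter standing in for the degree of compression.

```python
def quantise(values, step):
    """Collapse each grey value to the bottom of its bucket, so that
    tones closer together than `step` become a single tone."""
    return [(v // step) * step for v in values]


gradient = list(range(100, 116))  # 16 distinct, smoothly rising tones
mild = quantise(gradient, 2)      # gentle 'compression'
harsh = quantise(gradient, 8)     # aggressive 'compression'

# Number of distinct tones surviving in each version.
print(len(set(gradient)), len(set(mild)), len(set(harsh)))
```

Once the values have been collapsed, nothing in the saved data records which of the original tones each pixel had, so the smooth gradient cannot be reconstructed.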

§ 20 In the following segment of a b/w image, JPG compression

has been used for successive saves. The first image is as

the shot came off the camera (which unfortunately stores to

JPG by default, but at high-quality compression). The point

to note is the clear gradation from dark to light grey

across the shot (this is part of a beach – the rest of the

image has been cropped off to save space). The second image

has been subjected to low-quality JPG compression. It should

be visible that the gradation in grey has now changed from

smooth, into blocks with sudden shifts from one tone to the

next. The third image has had a further level of compression

used, and this is now almost unintelligible: the gradation

is almost entirely lost, 'blocking' has appeared, and there

is a 'watery' look to the picture caused by the loss of

detail.

Figure 6: Beach Image 1

Figure 7: Beach Image 2

Figure 8: Beach Image 3

§ 21 (If you find that you do not see a smooth grey on the first

picture, check in your monitor settings that you have your

display set to 'millions of colours' or high resolution. If

it is not, changing it will dramatically enhance the

appearance of everything on your computer screen. Most of

the samples in this article will not be informative unless

viewed at high resolution.)

§ 22 Archive-quality digital images should not use JPG at any

point in the capture or delivery process since it is a

destructive process, and colours discarded by the

compression algorithm can never be recovered.

§ 23 The colour of many images is incorrect in some way:

it is not unknown for the operator to correct the colour

appearance of the image while viewing it on an uncalibrated

screen. All uncalibrated monitors have a colour cast, so the

operator is compensating for the colour deficiency of their

own hardware, and falsifying the colour information in the

image, so that when viewed or printed on properly calibrated

equipment, the image has a colour cast. Other colour

problems have been caused by embedding the wrong colour

profile in the image, so the software displays the colours

as they would appear if scanned on one piece of equipment,

but the picture was scanned on something completely

different. Fortunately, where the archive complied with our

request to include a colour scale, we are able to see where

there is a colour problem, and it is often simply the

misapplication of a profile.

§ 24 The following examples are copies of the same image. The

first has the correct profile embedded: it should have a

grey border, but your screen may be mis-calibrated (most

are: they come out of the box like that). Unfortunately the

human eye compensates for colour casts: if you put on a pair

of green sunglasses in bright weather, the sky still looks

blue, even though it is not, because your brain is

compensating for the superimposed colour. This is called

chromatic adaptation. If you are used to viewing the world

via a poorly calibrated screen, the grey border of this

image may look grey, but if you hold a grey card up to the

screen, you should see the difference (assuming there is

one).

Figure 9: Calibration Target 1

§ 25 The second picture is the same image with the wrong profile

embedded. If nothing else you should be able to see that it

has changed colour. On a correctly calibrated screen the

colours in this second image will appear heavily saturated

and the grey border will appear pinky-grey.

Figure 10: Calibration Target 2

§ 26 (Simple instructions for checking and calibrating your

monitor may be found on the DIAMM website: http://www.diamm.ac.uk/content/access/check.html.)

§ 27 One institution found that nearly half of its camera

operators were colourblind to some degree, but they had

never been tested, and were not aware that they had

colour-deficient vision. This should not affect the ability

to take a good picture, but would make evaluation of colour

liable to error.

§ 28 (To find out if you are colourblind, take these quick

tests: http://colorvisiontesting.com/online%20test.htm.)

§ 29 More disturbing is the tendency of operators in our

target institutions to shoot before the camera is properly in

focus. The result is a soft-focus image,

which the operator believes 'can be corrected in

image-processing software' afterwards using the Unsharp

Mask. If the image had been in focus to begin with it would

not require any post-processing time, and sharpening simply

serves to put a bright corona around all the marks on the

page, including dirt or mould, and increases graininess, all

of which contribute to the image being considerably less

useful than it should be. An increase in graining, and

resulting 'flatness' of the picture is one of the imaging

flaws that many suppliers seem to find difficult to see.
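The corona produced by sharpening can be demonstrated on a single row of grey values. The sketch below is a simplified stand-in for an unsharp mask: it uses a three-pixel box blur where real software would normally use a Gaussian blur, and it is not the algorithm of any particular package. The `amount` parameter is an assumption of this example.

```python
def box_blur(row):
    """Blur a row by averaging each pixel with its two neighbours
    (edge pixels reuse themselves as the missing neighbour)."""
    n = len(row)
    return [(row[max(i - 1, 0)] + row[i] + row[min(i + 1, n - 1)]) / 3
            for i in range(n)]


def unsharp_mask(row, amount=1.0):
    """Sharpen by adding back the difference between the row and its
    blurred copy, clamping results to the 0-255 range."""
    blurred = box_blur(row)
    return [max(0, min(255, round(v + amount * (v - b))))
            for v, b in zip(row, blurred)]


# Light parchment (200) with a dark mark (50) in the middle.
mark_on_page = [200, 200, 50, 50, 200, 200]
print(unsharp_mask(mark_on_page))
```

The pixels either side of the mark come out brighter than the surrounding page, and the mark itself is pushed darker than it really was: a halo has been added that was never on the document.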

§ 30 When DIAMM first started taking digital images and

approached libraries to digitize items in their collections,

several librarians said that they had seen digital images,

and didn't like them, so were reluctant to let us digitize

their holdings. Unfortunately, they had only seen bad

images. There are still widespread misconceptions about the

sort of quality you can get from a digital image, and these

are often due to a very poor understanding of how digital

images work. For example, many users of images do not

understand that reducing the size of an image to e-mail it

means that it is no longer large enough to print at high

quality at that size. The relationship of the screen image

to the printer is ignored. The dots that any printer

produces on a piece of paper to make up an image are far

smaller than the grid of minute squares that make up a

computer monitor – at least three or four times smaller. We

view screen images at 72 dpi (dots per inch) or now more

usually at 96 dpi (as screen quality has improved), but to

print you need the image to be at around 300 dpi, or the

dots will not be close enough together to create sharp,

clear pictures. A high-quality digital image, whether

displayed on screen or printed, can and should be

better than its analog counterpart.
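The relationship between pixel dimensions and output size is simple division, as the following sketch shows. The 1200 x 900 pixel image is an invented example, and the dpi values are the screen and print figures quoted above.

```python
def output_size_inches(width_px, height_px, dpi):
    """Physical size at which an image appears when its pixels are
    laid out at the given number of dots per inch."""
    return width_px / dpi, height_px / dpi


# A hypothetical image reduced to 1200 x 900 pixels for e-mailing.
print(output_size_inches(1200, 900, 96))   # size on a 96 dpi screen
print(output_size_inches(1200, 900, 300))  # size when printed at 300 dpi
```

An image that fills a large part of the screen therefore prints sharply at only about a third of that size.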

§ 31 The next example (I will not embarrass the supplier by

identifying it) shows part of an image that has been

sharpened excessively to compensate for focus shortcomings.

It is grainy and in places (elsewhere on the page)

unreadable. If this is the quality the supplier expects from

their images, then it is hardly surprising that they

consider digital a poor substitute for analog.

Figure 11: Chopin 1

§ 32 The next image is acceptable in quality, apart from some

colour banding along the edges of the black lines indicating

a fault with the scanner that the library probably hasn't

noticed (or more likely in this case cannot afford to fix).

It had no colour or size scales, so it is not possible to

evaluate the colour, but the quality of the scan in focus

and clarity is excellent.

Figure 12: Chopin 2

§ 33 Another flaw in imaging that is generally corrected

post-capture is poor lighting or incorrect exposure. An

over-dark picture can be lightened using a tool called level

adjust. However, doing this causes all the colours

present in the image to be 'spread out', which creates gaps in the

colour spectrum represented, as well as moving the colour

values to new values, so the result again is a falsification

of the information in the image. The first histogram shown

here is of an uncorrected image, the second shows the gaps

in colour values caused by stretching the values out.

Figure 13: Histogram 1

Figure 14: Histogram 2
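The gaps in the second histogram follow directly from the arithmetic. In this minimal sketch an over-dark image is assumed to use only the lower half of the 0-255 range, and a simple linear stretch stands in for the level-adjust tool; the function name and parameters are invented for the example.

```python
def stretch_levels(values, in_max, out_max=255):
    """Linearly stretch values in 0..in_max to fill 0..out_max."""
    return [min(out_max, v * out_max // in_max) for v in values]


dark_tones = list(range(0, 128))           # an image using only tones 0-127
stretched = stretch_levels(dark_tones, 127)

used = set(stretched)
gaps = [v for v in range(256) if v not in used]
print(len(used), len(gaps))
```

The stretched image spans the full range, but it still contains only as many distinct tones as it started with, so roughly every other value in the histogram is empty.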

§ 34 The sort of imaging undertaken by DIAMM is not in any way

comparable to consumer-level digital cameras, although the

gap has started to close in the last year. A good high

street SLR camera is now capable of taking a picture at 8–10

megapixels (the new Canon 400D digital SLR takes 10-megapixel

images). This will produce an image that can be

printed at A3 size at reasonable resolution, so the image

still looks clear and sharp in the details, even at that

size.

§ 35 DIAMM uses a PhaseOne PowerPhase FX scanning back, mounted

on a custom built focus box supplied by ICAM Archive

systems, specialists in archive imaging equipment. Images

have a maximum capture area of 144 megapixels and file sizes

are in the region of 280 MB (NB, not KB), whereas an 8

megapixel camera would produce 22 MB images if saved to TIF.

In order to achieve this sort of resolution, current

technology uses a camera with a digital scanning back on it,

since the cost of a sensor that size would be prohibitive –

such sensors do exist, but they are used in spy satellites

rather than general-user technology.

§ 36 A scanning back is not unlike a miniature flatbed scanner:

it has two rows of sensors, in which each sensor element

corresponds to a quarter of a pixel. Thus the FX uses

one row of 24,000 alternating red and green sensors, and

another row of 24,000 alternating green and blue sensors.

Each group of four sensor elements (red-green/green-blue) captures

one pixel of the final image.

Figure 15: RGBsensor

§ 37 (There are twice as many green sensors as the other

colours, because the human eye is most sensitive to green,

so green carries most of the perceived detail.) The scanning back takes a strip image

12,000 pixels wide, then moves forward and takes another

strip, repeating the process a maximum of 12,000 times. The

result is glued together into a complete image by the

capture software.
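The figures quoted for the scanning back are consistent with one another, as a short calculation shows. The constant names below are invented for this sketch; the numbers are those given in the text.

```python
SENSORS_PER_ROW = 24_000       # each of the two rows on the scanning back
SENSORS_PER_PIXEL_WIDTH = 2    # a 2 x 2 group of sensors makes one pixel
MAX_STRIPS = 12_000            # maximum number of steps across the frame


def max_capture_megapixels():
    """Maximum image area implied by the sensor layout, in megapixels."""
    pixels_wide = SENSORS_PER_ROW // SENSORS_PER_PIXEL_WIDTH
    return pixels_wide * MAX_STRIPS / 1_000_000


print(max_capture_megapixels())
```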

§ 38 The main disadvantage of this technology is that each

picture takes a long time: one scan can take in the region

of 5 minutes, whereas with a single-shot camera back,

capture is instantaneous. High-resolution imaging is

therefore very costly in comparison with lower-resolution or

analog alternatives, although it still has the advantage

over analog that there is no requirement for film processing

or printing.

§ 39 Until recently there was a huge gap between the quality

available to the consumer market, and that used by archives

and by DIAMM. Only recently has the demand for bigger and

better imaging in the hand-held single-shot market given

birth to a new generation of professional digital capture

media exemplified by the 39-megapixel single-shot camera

backs produced by Hasselblad and PhaseOne. However, these

are still highly specialist professional equipment: one of

these digital sensors, with a camera and lens(es) of

appropriate quality on the front costs in the region of

£25,000–£30,000.

§ 40 For those who are interested, PhaseOne have announced that

they will not be developing any further scanning backs, and

future development will now be towards single-shot capture

equipment.

§ 41 To clarify the type of images the various cameras produce,

a snapshot of a page of an Arthurian manuscript from the

John Rylands Library in Manchester is given below,

photographed for Dr Alison Stones' Lancelot-Graal project.

Dr Stones, an art historian, was interested in the

historiated initials on each page. The MS page is quite

large: if we had photographed using a Canon EOS 350D (8

megapixels), this is the maximum size the miniature would

appear on screen, without enlarging beyond a one-to-one

pixel-to-pixel relationship:

Figure 16: GB-Manchester, John Rylands Library, MS 1 f.121

Figure 17: Canon EOS

§ 42 Photographed with a PhaseOne P45 39 megapixel single-shot

camera the on-screen image would have appeared like this:

Figure 18: PhaseOne P45

§ 43 For many users that would be more than sufficient. However,

with the project's main camera, the PhaseOne FX, one of the

largest of the scanning-back generation of digital sensors,

the detail possible, particularly in on-screen view, is

considerably greater:

Figure 19: PhaseOne FX

§ 44 What is the purpose behind imaging at this extraordinary

quality? The main reason had its origins in the group of

sources for which DIAMM was originally conceived. There is a

considerable corpus of fragments of medieval polyphony

distributed around the world: access to them is often

difficult, and if the fragment is very small, the cost of

seeing it in person may be too high. Gathering these sources

together as b/w glossies or microfilms is a costly and

difficult process, and the outcome does not really give the

scholar materials that are good enough surrogates for

complex research, particularly if the original is damaged,

which it nearly always is. Part of the remit of DIAMM is the

reunification of a corpus which has become very widely

scattered over the centuries, and the provision of images

that support study of the document

significantly better than other

types of surrogate, and that have been shown in many instances to

yield more information than examining the original document.

By imaging at extremely high resolution, magnification alone

can reveal hidden data, but more importantly, the more

pixels we can cram into every inch of the original, the

better the chances of digitally repairing or restoring the

document.

§ 45 We take images directly from the original source, and not

from good surrogates such as Ektachromes or colour glossies,

since our resolution usually far exceeds that offered by any

surrogate. The following samples demonstrate the difference

between a scan of the original document and a scan at

similar resolution of a good colour photograph.

§ 46 The first difference is the colour: the photo scanned for

the left image was a few years old, but did not include a

colour scale, so we did not know that it had changed colour

with age. (It is possible that it had always had a pink cast

and hadn't changed with age, but without a colour scale

included in the picture we could not tell). We also didn't

know if the document had changed colour itself by the time

we reached it, as a colour scale would have supplied that

information (which is one reason that we insist on including

scales in all pictures). You may be able to see already the

difference in sharpness of the two images. The one on the

left used a UMAX high-resolution flatbed scanner to scan the

photograph, the one on the right was taken with the DIAMM

PhaseOne PowerPhase, imaging the source directly under

daylight balanced lighting conditions.

Figure 20: St Andrews University, Typ. GCA79.GR (verso)

§ 47 When we enlarged the two scans (which were made at the same

resolution) the photograph became too fuzzy to be useful

very early on. Our digital scan however, because it was

properly focused, stayed crisp and readable right up to

one-to-one resolution on screen.

![St Andrews University, Typ. GCA79.GR (verso), enlargement](/article/id/6965/file/103094/)

Figure 21: St Andrews University, Typ. GCA79.GR (verso) [Enlargement]

Digital Restoration

§ 48 The fragments in our original remit only survive today

because they were re-used when the music went out of

fashion. Parchment and vellum had intrinsic value that meant

it was recycled in many different ways: at best it might be

used as a wrapper for other documents (in which case it

might be in reasonable condition); worse scenarios (for the

music) are to be found when the surface was scraped,

refinished and written over, so that the music is

palimpsest. Even more damage was caused if it was used as

paste-downs or strengthening for bindings. Some of the items

photographed are barely recognisable as parchment. Given the

parlous state of our core starting corpus, the extremely

high resolution was essential in order to examine the

manuscripts in fine detail – far finer detail than can be

seen with the eye, even with a magnifying glass. With this

sort of quality and colour separation, it was possible for

us to develop digital restoration techniques which have

returned to legibility documents for which the text was

believed permanently lost. It is this activity for which

DIAMM is best known in the musicological and wider

manuscript study community.

§ 49 Most image restoration activity is centred on the

restoration of damaged photographs, where the surrogate,

rather than the original, is the object of interest (e.g.

Disney's famous restoration of their Snow White). Most of

these deal with historic photographs, but some have a more

serious application, such as improving medical imaging for

better diagnostic capability. Restoration of historic

photographs and glass plates has yielded a wealth of

historical data, but more recent history is also relevant,

since there are companies which specialise in 'improving'

early digital images. There are a few projects involved in

using digital imaging as DIAMM does, to improve the

visibility of real objects. Scientists working at NASA

contacted DIAMM early on, to see if our work was

complementary, but their main interest was in developing

repeatable algorithms. Exchanging images and trying our

various techniques confirmed that repeatable techniques

would not work on DIAMM sources.

§ 50 There is a wealth of information regarding these

types of restoration to be found by searching the web. A

few examples are given here.

§ 51 Basics of our restoration techniques are described on the

project website, http://www.diamm.ac.uk/content/restoration/index.html. Unfortunately, the damage we are trying to restore in

our document corpus is such that no single technique works

for every document, or even for consecutive leaves in a

single manuscript. This puts us at a disadvantage when

attempting to disseminate the techniques we have developed

to a broad readership, or teach individuals what they need

to apply them to their own corpus of documents.

§ 52 Digital restoration was certainly a goal of the project,

but creating new software to do that was very definitely not

something we wanted to become involved in. The Centre for

the Study of Ancient Documents in Oxford (http://www.csad.ox.ac.uk/) found that they did

have to create their own software, but their needs were

quite specific, and could be applied across a very wide

group of sources. Our needs on the other hand changed for

virtually every page we examined. We were concerned

therefore that whatever software we chose should be widely

available and should have a very solid commercial support

base, so that it would not fall out of use and leave us high

and dry.

§ 53 Two commercial packages, Adobe Photoshop and Paintshop Pro,

were considered, but Photoshop won out mainly because (at

that time) it offered something which no other software did:

the ability to create and save layers of work, much as

transparent overlays might be used with an overhead

projector. The file sizes grew with every overlay, but their

use did mean that processes applied to the underlying

document could be turned on and off, shuffled, or adjusted

in different ways.

§ 54 Photoshop offers a vast array of tools, most of which are

ignored in our restoration processes. However, the power of

the software underlying those tools is essential for the

aspects of it that we do use. It is developed as an artistic

tool, and one which enables professional photographers to do

in the digital medium what they used to do in the darkroom.

It was certainly not conceived for digital restoration,

though it does the job extremely well.

§ 55 Key to restoration is the ability to select very specific

colours: JPG compression attenuates the colour spectrum in

an image, thus limiting the quality of restoration that is

possible. If an element in an image is enlarged

sufficiently, it is possible to see that what might appear

at first to be a black or brown mark is in fact composed of

a very large number of colours, which can then be separated

out. Photoshop is able to differentiate electronically

between colours that the naked eye cannot tell apart, and

this allows the user to select colours which are all but

invisible, and darken them to increase readability. It also

allows the separation of colours which are nearly the same

so that, for instance, palimpsest text can be separated from

text written over it in ink of very similar colour.
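
The idea of selecting a narrow band of colours and darkening only those can be sketched in a few lines of code. This is not Photoshop's algorithm, which is not described here; it is a minimal illustration that assumes pixels are plain RGB triples, and the function names, colour values and tolerance are all hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each 0-255


def colour_distance(a: Pixel, b: Pixel) -> float:
    """Euclidean distance between two RGB colours."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def darken_selected(pixels: List[Pixel], target: Pixel,
                    tolerance: float, factor: float) -> List[Pixel]:
    """Darken only the pixels whose colour lies within `tolerance` of `target`.

    `factor` is between 0 and 1: 0.5 halves the brightness of selected pixels.
    Pixels outside the selection are returned unchanged.
    """
    result = []
    for p in pixels:
        if colour_distance(p, target) <= tolerance:
            result.append(tuple(int(c * factor) for c in p))
        else:
            result.append(p)
    return result


# Hypothetical values: faded ink only slightly darker than the parchment.
parchment = (200, 180, 150)
faded_ink = (190, 168, 140)
row = [parchment, faded_ink, parchment]
restored = darken_selected(row, target=faded_ink, tolerance=5.0, factor=0.5)
```

With a tight tolerance the parchment is left alone and only the faded ink is darkened, which is why colour information lost to compression limits what can be recovered.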

§ 56 Most of our restoration work relies on the ability to

separate and define colours very accurately and in minute

detail. Once you have done that, there are a variety of

simple processes or tools which can be used to darken or

lighten text or dirt. Sometimes it is not even necessary to

select colours before using the lightening/darkening tools.

Most superficial dirt can be faded back, and underlying ink

brought to the fore by simply using the level-adjust tool.
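
A levels adjustment of this kind can be described as a simple remapping of brightness values. The sketch below is a generic version of that mapping, not the code behind Photoshop's tool; the black point, white point and sample values are assumptions chosen for illustration.

```python
def adjust_level(value: int, black: int, white: int, gamma: float = 1.0) -> int:
    """Map one 0-255 channel value through a levels curve.

    Values at or below `black` become 0, values at or above `white` become
    255, and values in between are stretched across the full range. A `gamma`
    above 1 lightens the midtones; a `gamma` below 1 darkens them.
    """
    if white <= black:
        raise ValueError("white point must be greater than black point")
    scaled = (value - black) / (white - black)
    scaled = min(1.0, max(0.0, scaled))
    return round(255 * scaled ** (1.0 / gamma))


# Hypothetical greyscale readings: ink at 100, surface dirt at 160,
# clean parchment at 200.
ink = adjust_level(100, black=80, white=180)
dirt = adjust_level(160, black=80, white=180)
parchment = adjust_level(200, black=80, white=180)
```

In this example the ink moves closer to black while the dirt moves closer to white, so the contrast between them widens without any prior colour selection.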

§ 57 Techniques of image restoration is a vast subject, and it

is not possible to describe it in any detail here. As well

as the brief description on our website, DIAMM has published

an Image Restoration Workbook, written to accompany a

workshop where restoration techniques were taught. It may be

downloaded (free) from http://www.diamm.ac.uk/reports/Appx10.pdf

or http://www.methodsnetwork.ac.uk/publications/reports.html, and printed copies are available from the AHRC ICT

Methods Network, http://www.methodsnetwork.ac.uk/.

Unfortunately, due to copyright restrictions, the test

images used for restoration in the workshop are not

available for download. Some examples of the types of

restoration that have been undertaken, and the sort of

results that can be achieved, are given below.

§ 58 The first example comes from the Shakespeare Birthplace

Trust. This bifolio is used as a wrapper around more

delicate paper legal documents. On the inside face the music

is clear and clean, but the catalogue description of the

outer side describes it as having no music visible.

Restoration has revealed a transcribable texted secular

song: the result is not intended to restore the manuscript

to its pristine state – that would probably not be possible

without considerable editorial intervention (or 'faking

up'), but it has rendered the content readable even to a

relatively inexpert user.

![Click for full-sized image of GB-Stratford, Shakespeare Birthplace Trust DR 37 Vol. 41 (back cover) [before] GB-Stratford, Shakespeare Birthplace Trust DR 37 Vol. 41 (back cover) [before]](/article/id/6965/file/103095/)

Figure 22: GB-Stratford, Shakespeare Birthplace Trust DR

37 Vol. 41 (back cover) [before]

![Click for full-sized image of GB-Stratford, Shakespeare Birthplace Trust DR 37 Vol. 41 (back cover) [after] GB-Stratford, Shakespeare Birthplace Trust DR 37 Vol. 41 (back cover) [after]](/article/id/6965/file/103096/)

Figure 23: GB-Stratford, Shakespeare Birthplace Trust DR

37 Vol. 41 (back cover) [after]

§ 59 A rebinding programme in the 1960s and 70s in Cambrai

resulted in the binder discarding the original endpapers of

a number of manuscripts, papers which preserved a lost

musical repertory from a dismembered manuscript. A number of

leaves did survive thanks to changes in policy in the

restoration bindery, but many are now only known as offsets

on the original oak boards. The digital image is flipped to

create a mirror version of the offset, then the dark or

coloured writing is separated from the colour of the wood and

leather boards.
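
The two steps described here, mirroring the offset and then isolating the writing from the board, can be sketched as follows. The example assumes a small greyscale image held as rows of brightness values, and the threshold is a hypothetical figure; real boards would need colour selection rather than a single cut-off.

```python
from typing import List


def mirror_horizontally(image: List[List[int]]) -> List[List[int]]:
    """Return a left-to-right mirror of a row-major image."""
    return [row[::-1] for row in image]


def isolate_dark(image: List[List[int]], threshold: int) -> List[List[bool]]:
    """Mark every pixel darker than `threshold` as writing (True)."""
    return [[value < threshold for value in row] for row in image]


# Hypothetical offset: board at brightness 120, transferred ink at 40.
offset = [
    [120, 40, 120, 120],
    [120, 40, 40, 120],
]
readable = mirror_horizontally(offset)
mask = isolate_dark(readable, threshold=80)
```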

![Click for full-sized image of F-Cambrai, Bibliotheque Municipale MS C 647 (front board) [before] F-Cambrai, Bibliotheque Municipale MS C 647 (front board) [before]](/article/id/6965/file/103097/)

Figure 24: F-Cambrai, Bibliotheque Municipale MS C 647 (front

board) [before]

![Click for full-sized image of F-Cambrai, Bibliotheque Municipale MS C 647 (front board) [after] F-Cambrai, Bibliotheque Municipale MS C 647 (front board) [after]](/article/id/6965/file/103098/)

Figure 25: F-Cambrai, Bibliotheque Municipale MS C 647 (front

board) [after]

§ 60 The British Library and Bodleian Library manuscript and

early printed book collections are particularly rich in

endpapers. The one shown below, from the British Library, was

trimmed to size to fill out the binding shape left

unoccupied by the leather turnovers. Layers of glue and

other dirt concealed not only music, but a significant

section of text, which has now been transcribed.

![Click for full-sized image of GB-London, British Library, Add. 41340 (H), f. 100v [before] GB-London, British Library, Add. 41340 (H), f. 100v [before]](/article/id/6965/file/103099/)

Figure 26: GB-London, British

Library, Add. 41340 (H), f. 100v [before]

![Click for full-sized image of GB-London, British Library, Add. 41340 (H), f. 100v [after] GB-London, British Library, Add. 41340 (H), f. 100v [after]](/article/id/6965/file/103100/)

Figure 27: GB-London, British

Library, Add. 41340 (H), f. 100v [after]

§ 61 Corpus Christi College, Oxford has a well-known collection

of medieval manuscripts, among them MS 144, containing the

poems of Geoffrey of Vinsauf. Vinsauf's text is easy enough

to read, but must be digitally removed in order to reveal

the musical palimpsest, which was discovered because the galls

in the original ink had left traces which were becoming more

visible with time.

![Click for full-sized image of GB-Oxford, Corpus Christi College, MS 144, f. 25v [before] GB-Oxford, Corpus Christi College, MS 144, f. 25v [before]](/article/id/6965/file/103101/)

Figure 28: GB-Oxford,

Corpus Christi College, MS 144, f. 25v [before]

![Click for full-sized image of GB-Oxford, Corpus Christi College, MS 144, f. 25v [after] GB-Oxford, Corpus Christi College, MS 144, f. 25v [after]](/article/id/6965/file/103102/)

Figure 29: GB-Oxford,

Corpus Christi College, MS 144, f. 25v [after]

§ 62 There are ethical considerations in restoration: the tools

available in Photoshop allow a type of restoration that

relies heavily on editorial judgement. In the next example,

the damage caused by writing on the reverse of the leaf

burning through the paper has been 'cloned' out, by

replacing the damaged areas with segments of undamaged parts

of the page (the third image shows the cloned 'patches'

which have replaced areas of the original, showing the extent

to which the document is no longer a true

representation of the source). Where this is just a case of

eliminating material which is obviously show-through, or

burn-through, there is less likelihood of introducing

errors. However there are places where the editor 'repairs'

damaged musical notes, replacing them with what s/he

believes should be there, and that may not be correct, in

which case the result is misleading. This particular

document could be repaired much further, but this is about

as far as the editor can go without making decisions which

cannot be based on what can be seen.
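
One way to make the extent of such intervention explicit is to compare the restored image with the original and record which pixels differ. The sketch below shows that comparison in its simplest form; it is an illustration only, assumes both images are the same size and held as rows of values, and is not a description of how the 'cloned patches' image was produced.

```python
from typing import List, Sequence


def changed_mask(original: Sequence[Sequence[int]],
                 restored: Sequence[Sequence[int]]) -> List[List[bool]]:
    """Mark each pixel that differs between the original and the restoration."""
    if len(original) != len(restored) or any(
            len(a) != len(b) for a, b in zip(original, restored)):
        raise ValueError("images must have identical dimensions")
    return [[a != b for a, b in zip(row_o, row_r)]
            for row_o, row_r in zip(original, restored)]


def changed_fraction(mask: Sequence[Sequence[bool]]) -> float:
    """Proportion of the image that is no longer original material."""
    total = sum(len(row) for row in mask)
    changed = sum(sum(row) for row in mask)
    return changed / total if total else 0.0


# Hypothetical 2 x 2 detail in which one burnt-through pixel was cloned out.
before = [[200, 60], [200, 200]]
after = [[200, 200], [200, 200]]
mask = changed_mask(before, after)
fraction = changed_fraction(mask)
```

A record of this kind lets a reader see at a glance how much of a restored page rests on editorial judgement rather than on the source.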

![Click for full-sized image of I-Bologna, Civico Museo Bibliografico, MS Q15, f. 23 (detail) [before] I-Bologna, Civico Museo Bibliografico, MS Q15, f. 23 (detail) [before]](/article/id/6965/file/103103/)

Figure 30: I-Bologna, Civico Museo Bibliografico, MS Q15, f. 23

(detail) [before]

![Click for full-sized image of I-Bologna, Civico Museo Bibliografico, MS Q15, f. 23 (detail) [after] I-Bologna, Civico Museo Bibliografico, MS Q15, f. 23 (detail) [after]](/article/id/6965/file/103104/)

Figure 31: I-Bologna, Civico Museo Bibliografico, MS Q15, f. 23

(detail) [after]

![Click for full-sized image of I-Bologna, Civico Museo Bibliografico, MS Q15, f. 23 (detail) [cloned patches] I-Bologna, Civico Museo Bibliografico, MS Q15, f. 23 (detail) [cloned patches]](/article/id/6965/file/103105/)

Figure 32: I-Bologna, Civico Museo Bibliografico, MS Q15, f. 23

(detail) [cloned patches]

§ 63 This is the type of virtual restoration undertaken by

Fotoscientifica, a commercial organisation in Parma, Italy

(http://www.fotoscientificarecord.com/). Fotoscientifica is

the only major organisation successfully restoring documents

through digital imaging, but unlike DIAMM does it through a

combination of compounded multiple images of each page,

followed by detailed and painstaking post-processing work to

render a result that is not merely readable, but attempts to

restore the document to its original state (in so far as

that is possible to determine). As such, their processes are

extremely costly, and require the document to remain under

the lights and handling conditions for a significant amount

of time. Lacking the luxury of infinite funding, DIAMM

concentrated on making our documents readable, though not

necessarily beautiful, but much of our work is comparable

with that produced by Fotoscientifica. Fotoscientifica's

work in restoring documents with the sort of burn-through

shown above is, however, spectacular, and well worth a

visit. (Follow the links from http://www.fotoscientificarecord.com/: Cosa

facciamo, then click on the 3rd image in the bottom row of

samples – Documenti con scritte acide to see some samples of

their work).

§ 64 The main concern of our depositors was not that their

images might be stolen, but that their sources might be

misrepresented in some way. This led us to change our mode

of restoration: previously we had attempted to restore using

'naturalistic' colours similar to the original inks, mainly

by darkening or lightening particular colour selections, but

this could be mistaken for the actual appearance of the

source in some cases. Restoration of a complete Florentine

palimpsest manuscript was found to be far more successful

when the material that we wanted to restore to readability

was coloured an unlikely colour such as green or purple.

Although the same colours were selected in each restoration,

the coloured results were far more readable than those using

natural browns, and this technique had the advantage that

there was no longer any possibility that someone could

mistake a restored version for the original.
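
The false-colour approach amounts to replacing every selected pixel with a fixed, obviously artificial colour instead of a darker shade of itself. A minimal sketch follows, assuming RGB triples and a per-channel tolerance; the colour values and the choice of green are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each 0-255


def recolour_selected(pixels: List[Pixel], target: Pixel,
                      tolerance: int, highlight: Pixel) -> List[Pixel]:
    """Replace pixels within `tolerance` of `target` on every channel.

    Selected pixels take the `highlight` colour; all others are unchanged.
    """
    def selected(p: Pixel) -> bool:
        return all(abs(c - t) <= tolerance for c, t in zip(p, target))

    return [highlight if selected(p) else p for p in pixels]


# Hypothetical colours: parchment, faint under-text, later over-text.
parchment = (200, 180, 150)
under_text = (190, 168, 140)
over_text = (120, 90, 70)
green = (0, 160, 0)

row = [parchment, under_text, over_text]
false_colour = recolour_selected(row, under_text, tolerance=6, highlight=green)
```

Because the highlight colour never occurs in the source, the output cannot be mistaken for a photograph of the manuscript.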

![Click for full-sized image of I-Florence, Archivio di San Lorenzo MS 2211, folio 82v [before] I-Florence, Archivio di San Lorenzo MS 2211, folio 82v [before]](/article/id/6965/file/103106/)

Figure 33: I-Florence, Archivio di San Lorenzo MS 2211,

folio 82v [before]

![Click for full-sized image of I-Florence, Archivio di San Lorenzo MS 2211, folio 82v [after] I-Florence, Archivio di San Lorenzo MS 2211, folio 82v [after]](/article/id/6965/file/103107/)

Figure 34: I-Florence, Archivio di San Lorenzo MS 2211,

folio 82v [after]

Delivery

§ 65 DIAMM has become a significant collaborative effort between

medieval scholars and those with technical expertise,

resulting in the creation not only of an image archive of

exceptional quality images of European medieval music

manuscripts, but a delivery system that allows the research

community, and other users, to access these images with

ease.

§ 66 Early on in the project scholars discovered the offline

archive (established purely for preservation purposes) and

started to ask for access to the images, preferably on the

internet, which was fast becoming a natural means for

communicating data without distance limitation. In 1998

internet resources in the humanities research community were

very limited. Many of the libraries whose documents we had

digitized did not have access to the internet at all, and

were naturally very suspicious of this medium. The rights in

the images we had created remained with the owners of the

documents, a policy which was in some cases solely

responsible for the owner agreeing to allow us to digitize

their materials. Despite initial misgivings, every one of the

UK libraries, and many of the European ones, that we asked to

allow their images to appear in our online resource agreed.

§ 67 Suddenly a corpus that had only ever been studied in

isolated pockets, and usually only by senior scholars who

had the finances and commitment to the corpus to gather

surrogates for themselves, could be studied by anyone –

academic or not – from their desktop.

§ 68 The first website was designed only for a small number of

sources, and used the best technology available at the time

(PDF – portable document format) that would allow zoom and

rotate functionality. In order to get moderate resolution,

the user had to wait for the whole PDF to download before

they could view the image, several minutes in some cases,

particularly with dial-up access which was then the standard

for non-university spine sites. The Andrew W. Mellon

Foundation funded a major scoping study to develop a system

that would allow us to deliver high-resolution images at

speed, as well as accompanying them with metadata that had

been absent from the original website, since it was

originally only intended for specialist users.

§ 69 Several successive grants from the Foundation have

facilitated the development of a feature-rich web delivery

system for our image collection, and the expansion of the

metadata resource beyond only those manuscripts in the image

archive. Web-delivery is managed by the Centre for Computing

in the Humanities at King's College London, where browser

technology is constantly pushed to its limits to enrich the

online research environment in a number of music projects.

As an academic department they are committed to open-source

development, but if a piece of software does the job better

than anything else it will not be ignored simply because it

is not open source.

§ 70 Most of the website is accessible to non-registered users:

project information, notes on image restoration, library

address lists, the source lists and metadata, as well as

access to page-images of the printed catalogues and

electronic versions of the catalogue texts. Interested

readers may consult these parts of the website at any time:

www.diamm.ac.uk.

§ 71 The most important aspect of the new development was the

implementation of the Zoomify viewer (www.zoomify.com) to

display the images. We are now able to present registered

users with the full size (up to 320 MB) images, which

download instantly, and can be zoomed and panned in a

full-screen window with little appreciable time delay. (The

full size images may only be accessed by registered users,

due to rights-protection requirements of depositors.) A

number of image-based resources now use this software,

including most major art collections and auction houses

worldwide.
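
The reason a very large image can appear almost at once is that viewers of this kind do not send the whole file: the image is cut in advance into small tiles at several resolutions, and only the tiles needed for the current view are fetched. The sketch below illustrates that general tiled-pyramid idea. It is not Zoomify's own code or file layout, and the 256-pixel tile size, image dimensions and window size are assumptions.

```python
import math
from typing import List, Tuple


def pyramid_levels(width: int, height: int,
                   tile: int = 256) -> List[Tuple[int, int, int, int]]:
    """Return (width, height, tile columns, tile rows) per level, smallest first.

    Each level halves the one above it until the image fits in a single tile.
    """
    levels = []
    w, h = width, height
    while True:
        levels.append((w, h, math.ceil(w / tile), math.ceil(h / tile)))
        if w <= tile and h <= tile:
            break
        w, h = math.ceil(w / 2), math.ceil(h / 2)
    levels.reverse()
    return levels


def max_tiles_in_view(view_width: int, view_height: int, tile: int = 256) -> int:
    """Upper bound on tiles touching a window that is not aligned to the grid."""
    return (math.ceil(view_width / tile) + 1) * (math.ceil(view_height / tile) + 1)


# Hypothetical 10000 x 8000 pixel page viewed in a 1280 x 1024 window.
levels = pyramid_levels(10000, 8000)
full_resolution_tiles = levels[-1][2] * levels[-1][3]
needed = max_tiles_in_view(1280, 1024)
```

In this example the full-resolution level holds 1280 tiles, but a window of that size never needs more than 30 of them at once, whatever the zoom level.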

§ 72 The size of image viewed, in the case of DIAMM, is limited

only by the user's screen size. Several windows can be

opened simultaneously, allowing side-by-side comparison and,

thanks to consistent imaging standards, true comparison is

possible in this medium. A recent adjunct to the

image-viewer is the list of 'secondary' or 'alternate'

images which now appears in the toolbar. These are UV,

watermark (using a light sheet) or restored versions of the

same page, and clicking on the link brings this image up in

the same viewer so that they can be compared side-by-side at

similar or different magnifications. The next phase of

development will see much wider exploitation of this tool,

which has considerable features which we are not yet using.

One department which has experimented with the possibilities

in Zoomify is the University of Melbourne's Educational

Technology Services. This is by no means an exhaustive selection, but it

gives some idea of the flexibility of this tool.

§ 73 Zoomify is not Open Source. Neither, in all probability, is

the browser being used to read this. Nor are the word

processor or PowerPoint software that are relied upon so

heavily for everyday work. Some open-source Java-based

viewers have been developed which claim the same

functionality as Zoomify, but are much slower, since Zoomify

uses the Flash plugin. Our decision to use this

non-open-source software is based on functionality and the

increased availability of our resource to the end-user that

it provides. Since Flash is now installed as standard in

most browsers, the user does not need to download anything

to access our images. Since this part of the website is not

viewable to non-registered users, I have provided some

screenshots to demonstrate some of the facilities available.

§ 74 Workshops with musicologists led to the design of several

tools to accompany the images: the first (designed

principally by John Bradley at CCH) allows the user to

create personal annotations which attach to an individual

image, persist between login sessions, and are not visible

to other users. The tool includes a small set of formatting

commands (activated by clicking an icon), and the facility

to paste in source or image reference numbers from other

windows (the numbers are given beneath each image) which

become live links to open other source descriptions or

images. Extending this tool, we provided a nearly identical

tool, but one which is visible to any user of the site, thus

creating the facility for an open discussion forum, though

not on a Wiki model (suggestions that we implement a Wiki

forum are being considered in the next phase of work).

Finally we added a text transcription tool based on the same

engineering as the commenting tools. We are gradually adding

full text transcriptions for all the sources in the

collection that will eventually be fully searchable both in

original and standardized spelling; this tool allows users

to contribute to the work of transcription, and their

contributions will be moderated before incorporation into

the search system.
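
The difference between the private annotation tool and the public commenting tool comes down to who is allowed to see a given note. A minimal sketch of that visibility rule follows; the class, field names and identifiers are hypothetical and do not describe the data model actually used at CCH.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Note:
    author: str
    image_id: str
    text: str
    public: bool = False  # False: private annotation; True: open comment


def visible_notes(notes: List[Note], image_id: str, viewer: str) -> List[Note]:
    """Notes on one image that `viewer` may see: public ones plus their own."""
    return [n for n in notes
            if n.image_id == image_id and (n.public or n.author == viewer)]


# Hypothetical users and image identifiers.
notes = [
    Note("alice", "img-1", "check the clef on stave 3"),
    Note("bob", "img-1", "compare with the other leaf", public=True),
    Note("bob", "img-2", "private reminder"),
]
for_alice = visible_notes(notes, "img-1", viewer="alice")
for_carol = visible_notes(notes, "img-1", viewer="carol")
```

Using one structure with a visibility flag reflects the point made above that the public tool is nearly identical to the private one.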

Figure 35: DIAMM Website

§ 75 The location of any comment made by a user is saved in a

'MyDIAMM' area, which only the logged-in user can see. This

creates pick-lists (like a shopping basket) of images or

manuscripts, circumventing the original search or browse

process necessary to locate an image.

§ 76 Where there are secondary images that supplement an

original, such as ultra-violet or restored versions of a

leaf, a further tool appears on the palette, offering a list

of secondary images which, when clicked, split the main

viewer to leave the original image on the left and present

the secondary one on the right. The two images can be panned

and zoomed independently.

Figure 36: DIAMM Website

Figure 37: DIAMM Website

§ 77 The database behind the online resource has grown immensely

in the last two years. Originally it was an administrative

tool that allowed us to keep track of our imaging work, and

store sufficient metadata that we would know when on site

whether we had been given the correct manuscript. Now, it is

the repository for a massive metadata resource covering all

medieval music sources, not just those we have photographed.

§ 78 The database has been populated directly from the contents

of two multi-volume printed catalogues which are normally

only available in libraries. Although the content is fully

integrated into the database, we have provided users with

the facility to access page images of the original

catalogues, and browse any volume page by page. We have

found that the website is visited more frequently by

scholars accessing the catalogues than by those searching

for images, highlighting a user requirement that had not

been anticipated, nor specifically raised in our workshops

or user-group surveys.

§ 79 The search engine is currently constructed on standard

principles, but relies on a source-based access method. For

users who are not familiar with searching sources by the

library where the manuscript is housed, the search engine is

being expanded and will cover a much broader set of criteria

that should enable users to create the sort of search

results that are not possible outside the digital medium.

§ 80 At present this is an image-based resource, but the next

phase of development (2007-9) will transform this into 'an

information resource with images'. Users will be able to

create inventories for manuscripts (surprisingly something

that is not available for all catalogued manuscripts),

source lists for composers that cross the boundaries of

single manuscripts, and other personalised research

materials which will be retained in their personal workspace

between login sessions, and may be made available to other

users if required.

§ 81 Online Registration will be in place in early 2007, but

presently creation of a user-account still requires the

completion of a hard-copy access agreement which has to be

posted to the project manager. At the moment the resource is

free of charge, but one of the conditions of our funding is

that by the end of 2009 we are self-sustaining, and

inevitably that is going to involve the implementation of

some sort of charging model – probably for the research

metadata and tools: we intend to keep access to the images

free.

Collaborations

§ 82 DIAMM has become a significant information repository, and

over the years has developed close ties with other

musicological projects overlapping or dovetailing in content

and repertory. Plans for the next phase of work include the

collaboration of a number of libraries which house books

originally created in the Alamire workshop in the

Netherlands. The Alamire books are a famous example of a

nearly complete corpus of richly illuminated manuscripts,

which now belong to libraries in Jena (the largest group),

Vienna, 's-Hertogenbosch and elsewhere. DIAMM will be

bringing these collection-holders together to establish a

project that will seek funding to digitize these sources and

virtually re-unify them through the DIAMM website, where

they may all be accessed, even though the original images

will reside on the server of the participating institution.

§ 83 An evolution in metadata management is about to take place

with DIAMM and four other major metadata creators in the

musicology field: Oliver Huck's Die Musik des

Trecento database and variorum representation project

based in Jena and Hamburg; Theodor Dumitrescu's Corpus Mensurabilis Musice

Electronicum in Utrecht; Thomas Schmidt-Beste's

motet database in Heidelberg and the University of Bangor

(early samples of this can be seen at http://www.arts.ufl.edu/motet/); and the

Chanson

database at the University of Tours.

§ 84 These projects plan to create a single collaborative

database, dealing not only with text metadata, but also with

newly created searchable music incipits (this

last item is only now possible with the XML-based software

developed by Dr Dumitrescu, see Ted Dumitrescu:

Corpus Mensurabilis Musice 'Electronicum': Toward a

Flexible Electronic Representation of Music in Mensural

Notation, Computing in Musicology 12 (2001):

3-18), and links to the DIAMM image corpus. The result will

be a major distributed database, populated by the

participating projects, but residing in a centralised

location, and queried by the custom front-ends of each

project. After the pilot phase of development and content

population in 2007, a small number of archives that have

expressed a wish to participate in metadata sharing and the

establishment of metadata standards for music manuscript

description (including the Bayerische Staatsbibliothek) will

join the initiative as test participants.

§ 85 The project team is particularly anxious that the resource

we have created should be exploited more widely. We have

therefore invited a number of projects for which we have

done imaging work to display their images through the DIAMM

website. DIAMM has 'unlimited' server space, a facility

rarely available to collection holders or small projects,

and the website provides a rich research environment in

which to present images. We are therefore actively

soliciting deposits from other medieval projects that need a

delivery system, but whose funding does not permit them to

develop a system as complex as that offered by DIAMM. It

will be possible to define independent projects within DIAMM

so that each retains its identity, but the tools and features

will be consistent across the resource.

§ 86 At present there would be no charge for inclusion, but the

depositor must provide a certain level of metadata to

accompany their images, and must negotiate the rights for

online delivery with the document owner(s). (If you need

assistance in negotiating rights please contact DIAMM for

advice.)

A last gripe

§ 87 DIAMM is held back by the limitations of the web but

propelled forward by the needs of our community. In

providing this resource, research methods have changed, and

continue to evolve pro-actively and in response to the

potential of the online medium. The rapid advance and

emergence of new web and digital technology is a constant

challenge to long-term planning and sustainability, and we

rely heavily on our technical partners at CCH to keep the

resource we have created from stagnating. Our intention was

to create images that would stand the test of time, and so

far they are doing that, although we are still faced with

the unknown of digital longevity, storage media and file

formats. The project has expanded in every direction since

its inception, and continues to do so both in response to

technology and to the needs of the wider research community

that it serves.

§ 88 Digital imaging has been around for quite a while now,

leading to a false impression of knowledge or skill, but is

really still in its infancy: too many archives are setting

up imaging with little or no expertise and without the

appropriate backing in such basics as colour calibration:

their staff cannot tell the difference between a good image

and a bad one; in some cases they rely on outside suppliers

who QA their own work and tell the library that they are

getting something good (which they probably believe), when

in fact they are getting something appalling. Recently

someone involved with a research project remarked that they

were thinking of buying a digital camera (a PhaseOne P45, so

not an idle outlay) and starting to take pictures of

manuscripts themselves, despite the fact that they had no

idea what was required to take a good image. There is still

the perception that archive imaging is merely a question of

pressing a button (like holiday snapshots), and the ghastly

consequences of this complacency are to be seen all around

us.

§ 89 Since its inception DIAMM has had to swim against a tide of

misinformation presented in the guise of 'expertise', or

quality expectations based on poor exemplars. Particularly

frustrating for DIAMM is that an archive will often refuse

to allow better quality imaging to be undertaken if a

manuscript has already been photographed, thus leaving an

artefact to deteriorate with no accurate,

preservation-quality record having been made of it. In some

cases, the results produced by a supplier have put off the

archive from ever having any further imaging done, and they

assume that DIAMM produces the same poor images that their

first encounter produced.

§ 90 Although there is nothing that can be done to address the

problem of visual acuity in evaluating digital images, a

number of projects and digital image producers recognise

that the lack of a universally-accepted standard is a

barrier to progress in improving imaging quality,

particularly with documents for which there is perhaps only

one chance to get a digital image. In collaboration we

intend to produce a standard that can be disseminated with

the backing of major institutions to establish benchmark

procedures that will ensure a certain level of quality for

all archive imaging. The basic 'rules' are listed above

("Image Quality"),

and we hope to publish and disseminate our paper on

standards during 2007.

Works cited

More about DIAMM:

The DIAMM web site.

Marilyn Deegan and Julia

Craig-McFeely. 2005.

Bringing the Digital Revolution to Medieval

Musicology: The Digital Image Archive of Medieval Music

(DIAMM). RLG DigiNews Jun 15, 2005.

Andrew Wathey, Margaret Bent, Julia Craig-McFeely. 2001.

The Art of Virtual Restoration: Creating the

Digital Image Archive of Medieval Music (DIAMM).

The Virtual Score: Representation, Retrieval, Restoration:

Computing in Musicology 12, published by CCARH (Stanford,

California) and The MIT Press (Cambridge, MA, London),

pp 227-240.

Reports on workshops and other studies by DIAMM funded

by the Andrew W. Mellon Foundation can be accessed at http://www.diamm.ac.uk/reports/.

Rights and intellectual property management when

photographing and delivering third-party images: http://www.diamm.ac.uk/reports/June.pdf

Metadata for description of music manuscripts: http://www.diamm.ac.uk/reports/DTD.pdf.

Sustainability: http://www.diamm.ac.uk/reports/Appx06,

http://www.diamm.ac.uk/reports/Appx07,

http://www.diamm.ac.uk/reports/Appx08.

Centre for Computing in the Humanities, King's College

London (CCH) http://www.kcl.ac.uk/cch/.